MMToM-QA Benchmark

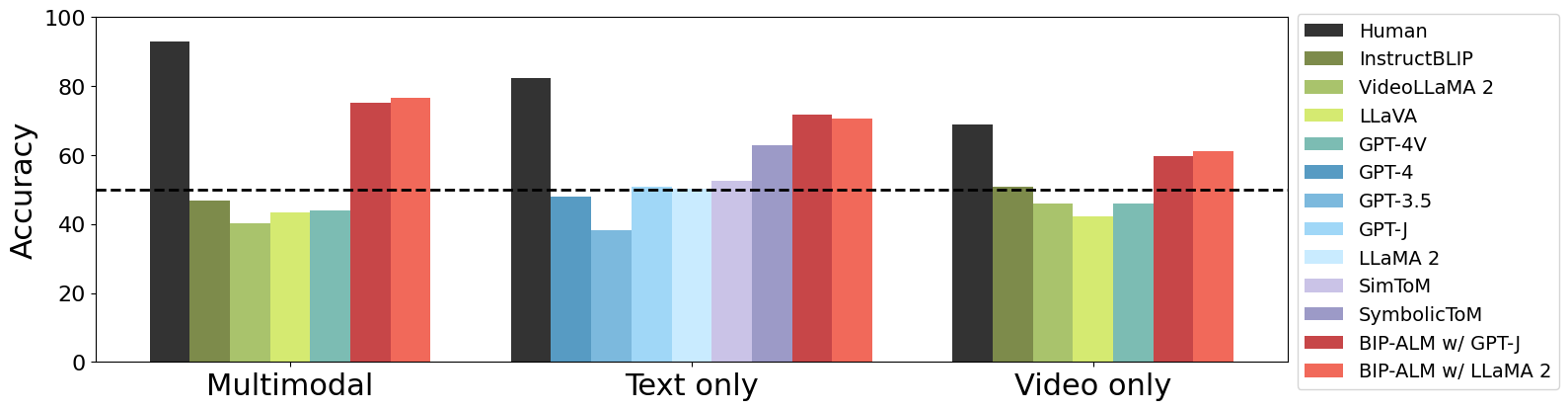

MMToM-QA systematically evaluates the cognitive ability to understand people's minds on both multimodal data and different kinds of unimodal data. It consists of 600 questions grouped into seven types, which evaluate belief inference and goal inference in rich and diverse situations. There are three belief inference types with 100 questions each (300 belief questions in total) and four goal inference types with 75 questions each (300 goal questions in total).
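The Python sketch below checks the question breakdown. It takes only the stated figures (100 questions per belief type, 75 per goal type, 300 questions per category) and derives the number of types in each category. The constant and function names are illustrative assumptions and are not part of the benchmark's released code.

```python
# Figures stated in the benchmark description: questions per type and per category.
QUESTIONS_PER_TYPE = {"belief": 100, "goal": 75}
CATEGORY_TOTALS = {"belief": 300, "goal": 300}


def types_per_category(per_type, totals):
    """Derive how many question types each category must contain."""
    counts = {}
    for category, total in totals.items():
        n_types, remainder = divmod(total, per_type[category])
        # Every category total should be an exact multiple of its per-type count.
        assert remainder == 0, f"{category}: {total} is not a multiple of {per_type[category]}"
        counts[category] = n_types
    return counts


type_counts = types_per_category(QUESTIONS_PER_TYPE, CATEGORY_TOTALS)
total_types = sum(type_counts.values())
total_questions = sum(CATEGORY_TOTALS.values())

print(type_counts)      # {'belief': 3, 'goal': 4}
print(total_types)      # 7
print(total_questions)  # 600
```

The derived counts (3 + 4 = 7 types, 300 + 300 = 600 questions) match the totals given in the description.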

The MMToM-QA benchmark and usage instructions are available in the GitHub repository. A text-only version is also available for download from Hugging Face.

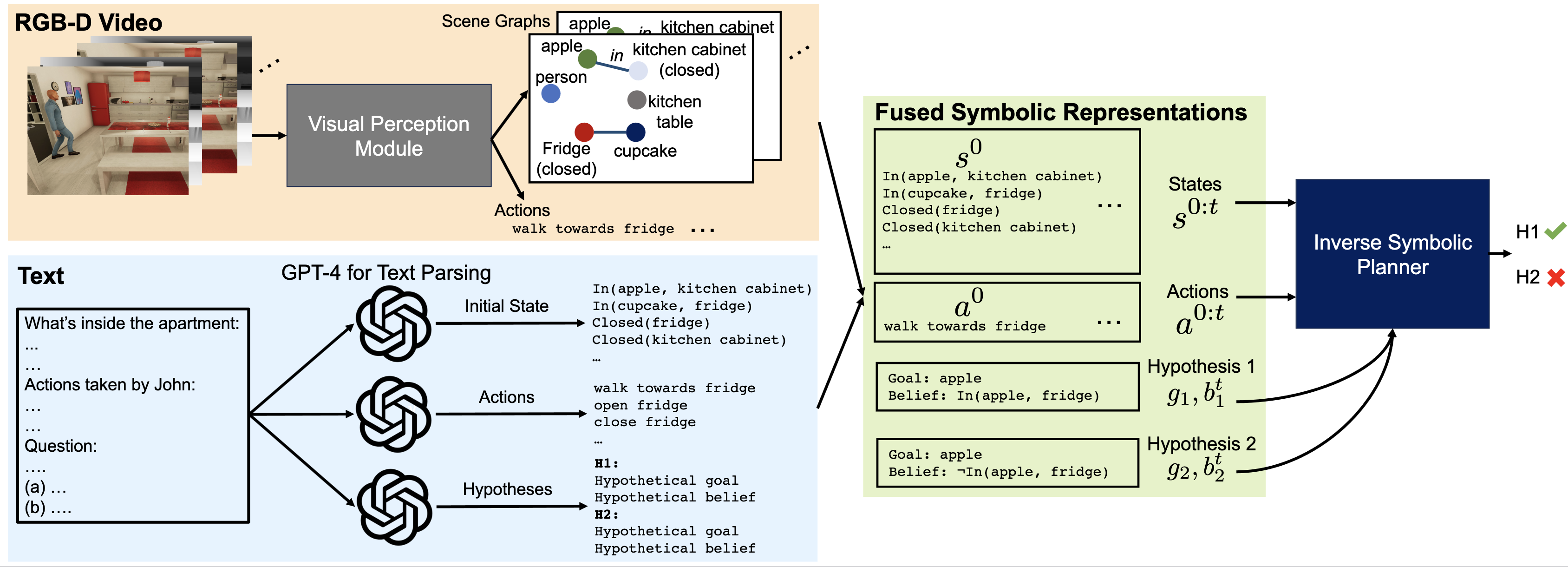

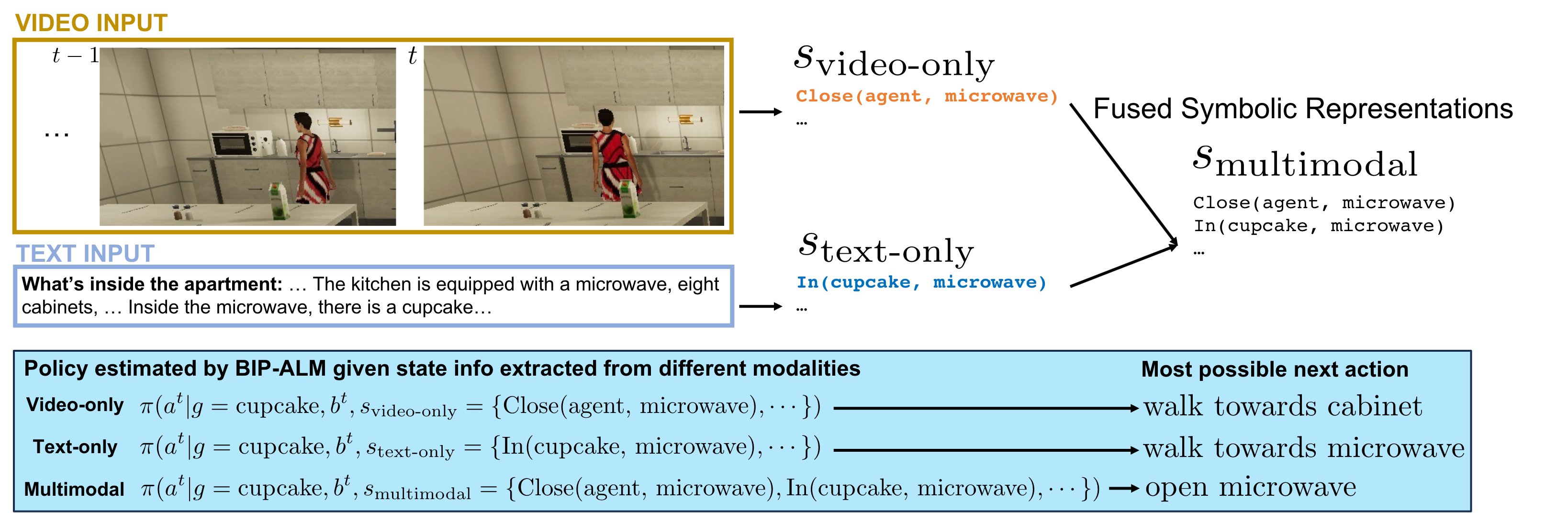

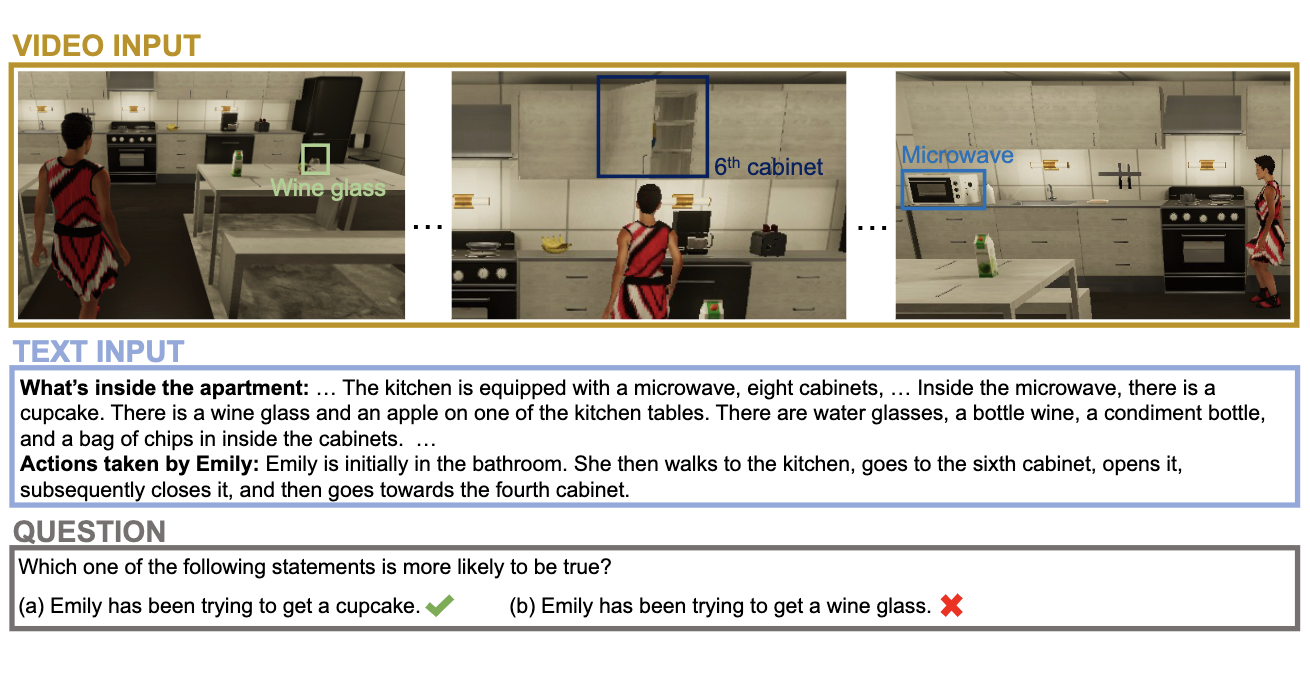

Each question is paired with a video clip of the full activity (provided as RGB-D frames), along with a text description of the scene and of the actions the person takes in that clip.
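One way to picture a single benchmark item is as a record that holds a video clip of RGB-D frames and the two text descriptions. The sketch below is a hypothetical layout: the class names, field names, and file paths are illustrative assumptions and do not reflect the schema of the official MMToM-QA release.

```python
from dataclasses import dataclass, field
from typing import List


# Hypothetical layout: names are illustrative, not the official MMToM-QA schema.
@dataclass
class RGBDFrame:
    rgb_path: str    # path to the colour image for this frame
    depth_path: str  # path to the aligned depth map for this frame


@dataclass
class MMToMQuestion:
    question: str    # the belief or goal inference question
    category: str    # "belief" or "goal"
    frames: List[RGBDFrame] = field(default_factory=list)  # video clip as RGB-D frames
    scene_text: str = ""    # text description of the scene
    actions_text: str = ""  # text description of the person's actions

    def modalities(self) -> List[str]:
        """List which modalities this record carries: 'video', 'text', or both."""
        mods = []
        if self.frames:
            mods.append("video")
        if self.scene_text or self.actions_text:
            mods.append("text")
        return mods


# Illustrative usage with placeholder strings and file names.
multimodal = MMToMQuestion(
    question="<question text>",
    category="goal",
    frames=[RGBDFrame("frame_0000_rgb.png", "frame_0000_depth.png")],
    scene_text="<scene description>",
    actions_text="<action description>",
)
text_only = MMToMQuestion(
    question="<question text>",
    category="belief",
    scene_text="<scene description>",
    actions_text="<action description>",
)

print(multimodal.modalities())  # ['video', 'text']
print(text_only.modalities())   # ['text']
```

Under this assumed layout, a video-only or text-only evaluation corresponds to dropping one of the two modalities from the same record, which mirrors the benchmark's comparison of multimodal and unimodal inputs.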

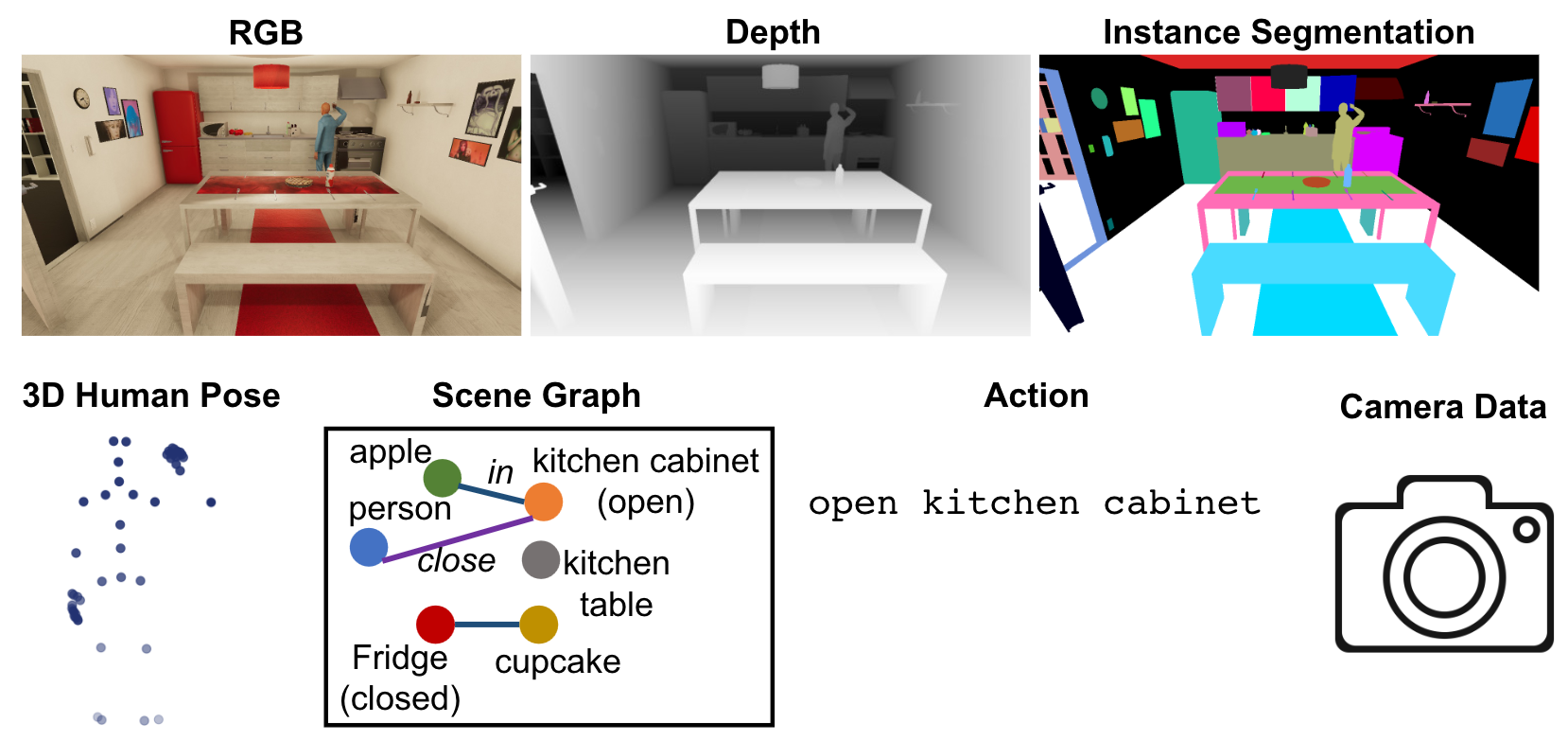

(a) Types of data provided

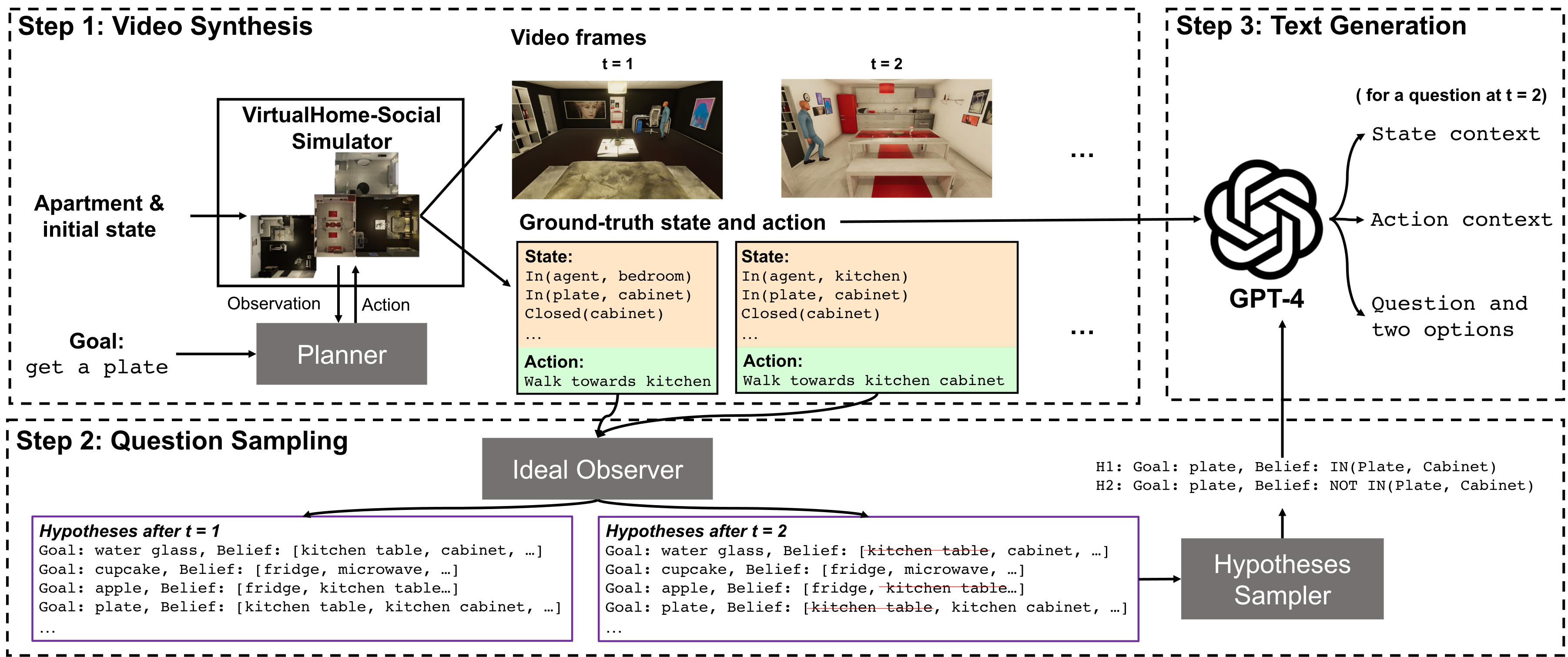

(b) The procedural generation process